Datacenter Closure

A solution to automate datacenter closures using a model-driven app, Power Automate, Azure DevOps, and Power BI

January 16, 2021

Solution Description

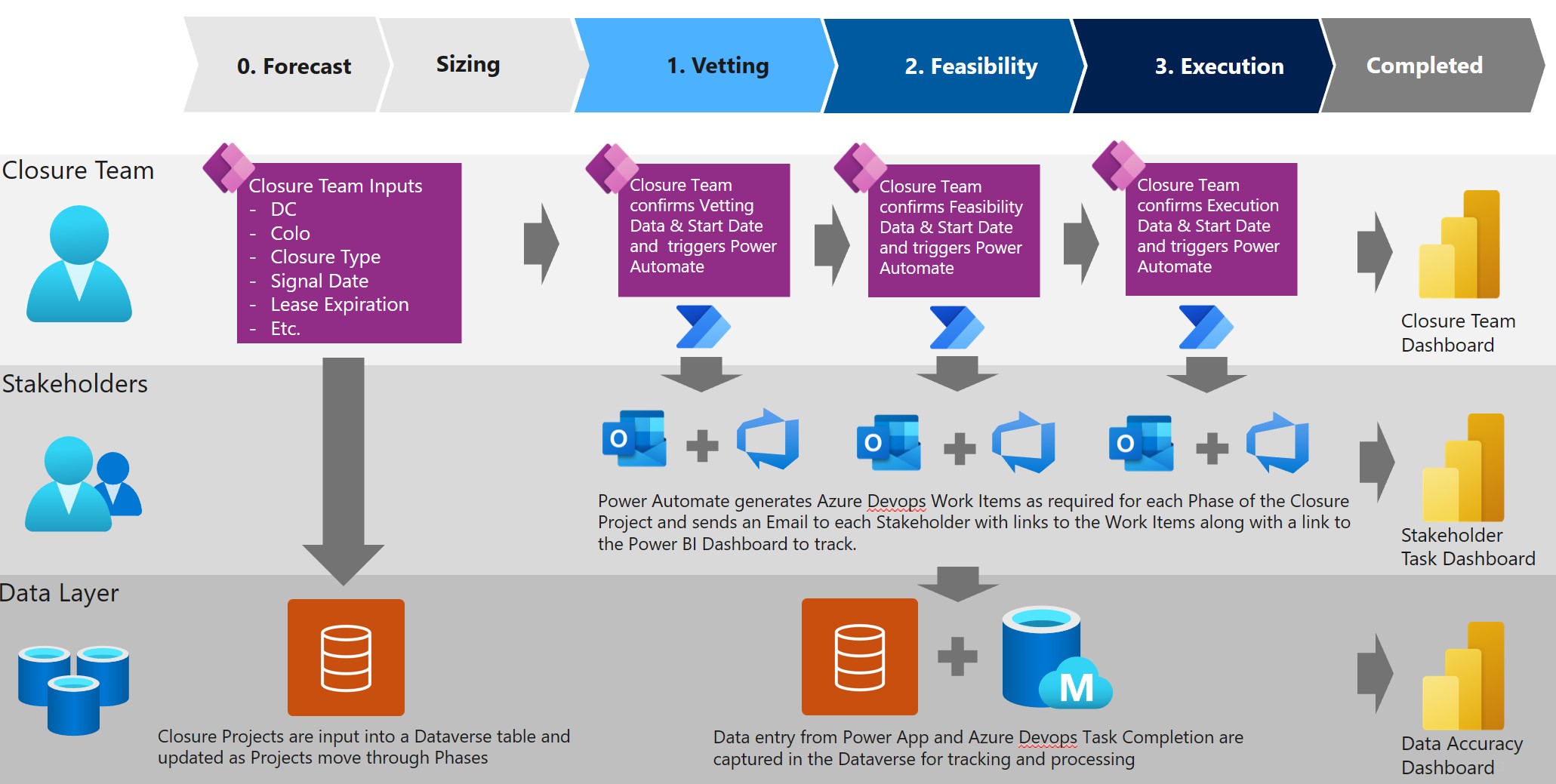

The DC Closure Team (DCCT) is responsible for end-to-end project management of all Microsoft datacenter closure efforts. Each project requires input from up to 24 stakeholder groups across three phases: Vetting, Feasibility, and Execution. Over the past fiscal year, DCCT received feedback that most stakeholders either prefer to work in Azure DevOps (ADO) or plan to move to the platform.

This tooling release moves stakeholder inputs from the GDCO App to ADO and creates a DC Closure project management portal and database in Power Apps. The portal uses Power Automate to start the Vetting, Feasibility, and Execution workflows and to create stakeholder tasks in ADO. To keep the transition seamless for stakeholders, DCCT's Power BI reporting has been migrated to the new dataset.

E2E Diagram

Impact

- Supports all DC Closure project types, including Edge, Partial Colo, Microsoft-owned, and leased colos, currently totaling 299 projects, and growing!

- Enables the DC Closure Team to respond quickly to feedback regarding task content, structure, and business requirements.

- Creates a centralized data repository for all DC Closure project information, removing dependency on email and manual spreadsheets.

- Improves date logic and reduces human error by validating project detail dates against system dates.

- Enables faster and more frequent refreshes of DC Closure datasets, ensuring stakeholders have accurate information.

- Builds the foundation for improved data validation and systematic automation of stakeholder inputs.

Interesting Aspect of this Solution #1

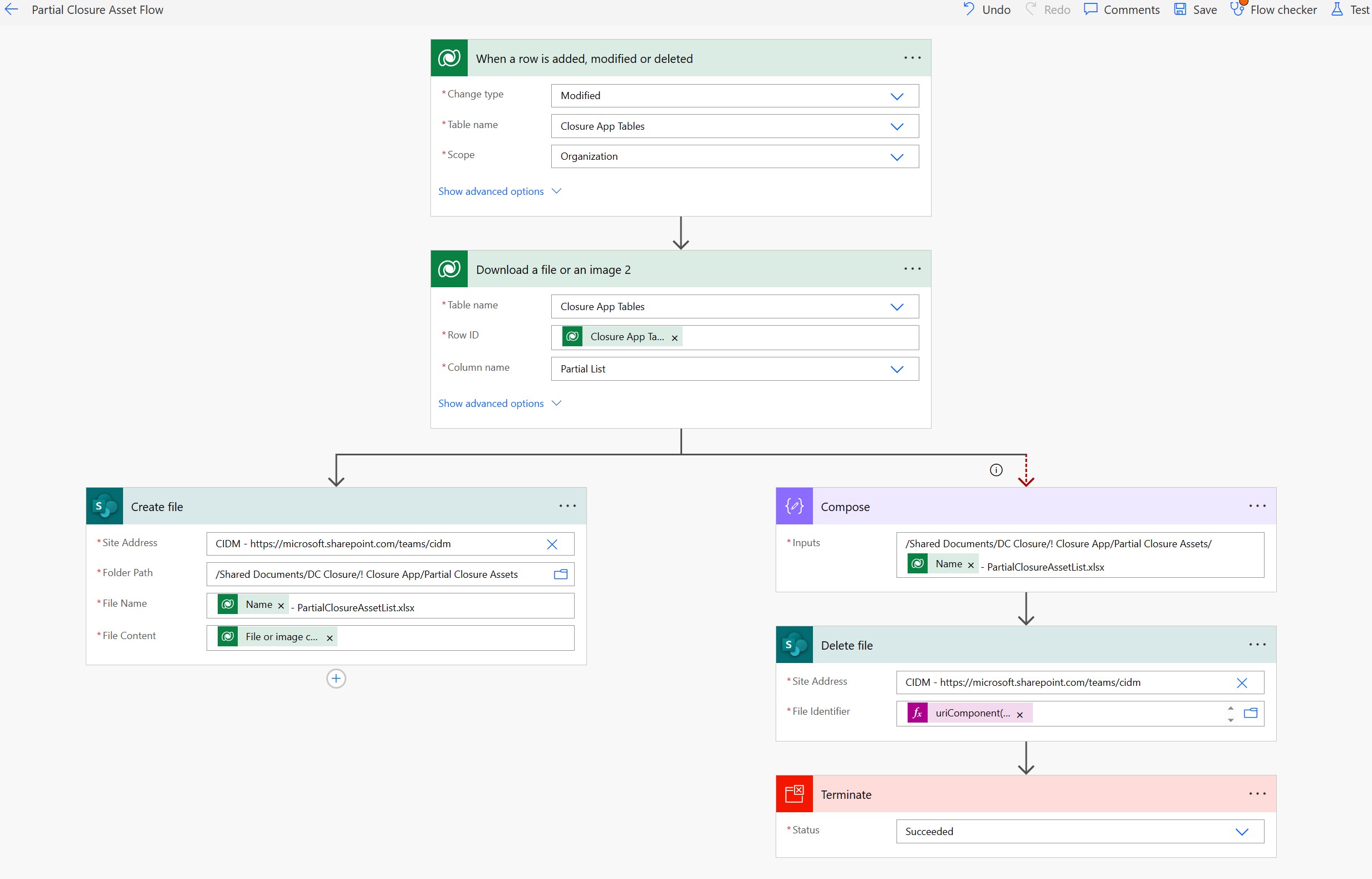

To accommodate Partial Closures, where a closure affects only some of a datacenter's assets, the team asked for the ability to upload an Excel file during project creation that lists only the assets in scope. The hard part was keeping a SharePoint repository in sync with the model-driven app: the file had to be copied there automatically when it was added in the UI and deleted when it was removed, while keeping the flow as simple, and therefore as fast, as possible.

To do this, I built the flow shown above. It runs whenever the Excel file on the project's Dataverse record is added or removed, and its first step tries to download that file. From that step the flow splits into two parallel branches. If a file is present, the download succeeds and the left (success) branch uploads it to the SharePoint folder. If there is no file because the user deleted it in the UI, the flow still triggers, but the download fails; the flow then follows the right (failure) branch and removes the previously uploaded file from the SharePoint folder. One flow therefore handles both the add and the remove case with a minimal number of actions, which keeps it fast.

To delete a file that is no longer present in the UI, the file identifier (the output of a Compose step) is URI-encoded with the uriComponent function so that the Delete file action targets that specific file in the SharePoint folder. The result is a compact flow that keeps SharePoint in sync with the app without any extra steps for the user. The Delete file action in the failure branch is defined as follows:

```json
{
  "inputs": {
    "host": {
      "connectionName": "shared_sharepointonline_1",
      "operationId": "DeleteFile",
      "apiId": "/providers/Microsoft.PowerApps/apis/shared_sharepointonline"
    },
    "parameters": {
      "dataset": "CIDM - https://microsoft.sharepoint.com/teams/cidm",
      "id": "@uriComponent(outputs('Compose'))"
    },
    "authentication": "@parameters('$authentication')"
  },
  "metadata": {
    "operationMetadataId": "1fda84a3-e72a-46fe-87b5-ee05738783a3"
  }
}
```
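The success/failure branching described above relies on Power Automate's "Configure run after" setting, which the flow's workflow definition stores in each action's runAfter property. The following is a minimal sketch of that wiring only, not the actual flow export: the action names (Download_a_file_or_an_image, Create_file, Delete_file) are hypothetical placeholders, each action's inputs are omitted for brevity, and the Compose step that feeds the Delete file action is left out.

```json
{
  "actions": {
    "Download_a_file_or_an_image": {
      "type": "OpenApiConnection",
      "runAfter": {}
    },
    "Create_file": {
      "type": "OpenApiConnection",
      "runAfter": {
        "Download_a_file_or_an_image": ["Succeeded"]
      }
    },
    "Delete_file": {
      "type": "OpenApiConnection",
      "runAfter": {
        "Download_a_file_or_an_image": ["Failed"]
      }
    }
  }
}
```

Because both branch actions depend on the same download step with mutually exclusive statuses, a normal run executes exactly one of them. A download that times out (status TimedOut) would trigger neither branch unless that status is also added to the failure branch's runAfter list.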